As excitedly as we talk about it, you’d think the promise of fully autonomous vehicles is right around the bend, just another few miles and we’ll pull into our destination. In truth, we’re not there yet, and a few sceptics even suggest we’ll never get there — too many bumps in the road ahead.

As engineers, we're confident that we'll reach the holy grail of autonomous driving. The key questions are: how long will it take, what's the best route, and how much will it cost?

Let's pull out a map and see how we can get there from here in automotive electronics. Integrating all of the different components and delivering seamless, safe performance will require serious engineering feats. The good news is that we're already standing on a stepping stone: advanced driver assistance systems (ADAS), seen today in features such as emergency braking assist, drive- and steer-by-wire and collision avoidance. If we want this kind of advanced technology in every mass-market vehicle, these systems need to be robust, low cost and low power. Fortunately, those are exactly the qualities the collaborative design-chain ecosystem delivers well and relentlessly optimizes. These core engineering tenets are the compass that will help us navigate the map toward the holy grail of autonomous vehicles.

Open source development for vehicles

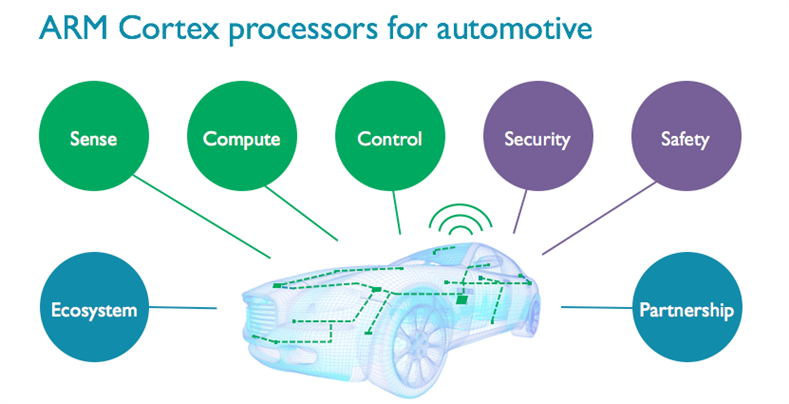

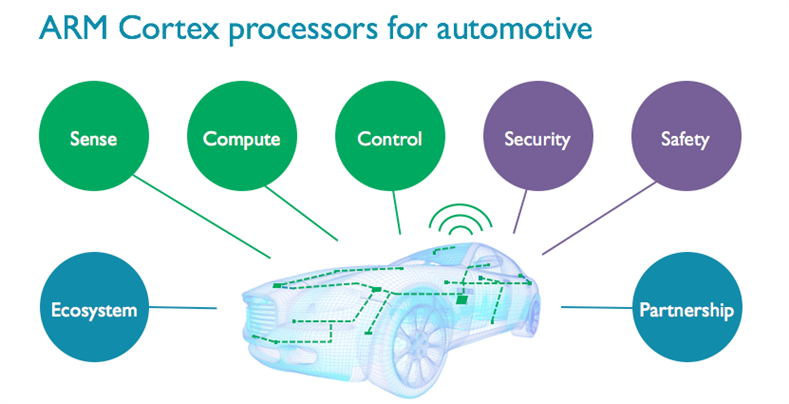

Take, for example, the recent announcement that Renault is teaming up with ARM to open up the software and hardware architecture of its Twizy car. Open source development can enable new features to be developed faster and with more variety. This is an exciting new step for the ARM ecosystem, which offers the widest choice of silicon suppliers. Constantly pushing boundaries, our partners address a range of automotive use cases, from the smallest sensor to the high-performance compute required by both feature-rich in-vehicle infotainment (IVI) and ADAS. The ARM partnership combines the technology IP, tools and software needed to deliver the scalability, security and safety of the automotive experience of the future.

This is probably the most important time for automotive electronics design. It marks the start of a world driven by data and technology, where new business models offer users more choices and new experiences. Indeed, as cars increasingly drive themselves, the future may be less about the drive itself and more about comfort, entertainment and the specific functions owners want from a vehicle. For example, some features that suit a small city car would look out of place in a family car or an SUV. Enabling multiple suppliers increases both the pace of progress and the number of choices.

We've just seen an example of how ecosystem innovation is quickening the pace for automotive designers on the road to the autonomous-vehicle holy grail. Let's take a closer look at how the ARM ecosystem is addressing two major areas of automotive electronics, IVI and ADAS, which will be key to the adoption and success of autonomous vehicles.

IVI connects the car to the outside world

A new study from Juniper Research titled “M2M: Strategies & Opportunities for MNOs, Service Providers & OEMs 2016-2021” concludes that over the next five years, data from connected car infotainment and telematics will comprise up to 98% of all M2M (machine to machine) data traffic.

This poses a number of challenges for IVI manufacturers and chipmakers, including thermal, area and power constraints. These challenges stem from diverse requirements: powering a large screen, enabling tactile feedback, displaying multiple apps from multiple sources, and so on. Here, the lessons of decades of mobile design are being applied to automotive. ARM's low-power processors, combined with big.LITTLE™ technology, which pairs high-performance "big" cores with energy-efficient "LITTLE" cores so that each workload runs on the most suitable core, enable manufacturers to deliver high, sustained performance throughout the IVI experience.
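To make the big.LITTLE idea concrete, here is a minimal sketch of threshold-based task placement across the two clusters. The task names, load figures and the `UP_MIGRATION_THRESHOLD` value are hypothetical, and this is not how ARM or any production scheduler actually decides placement; real systems such as Linux Energy Aware Scheduling use detailed energy and capacity models.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical threshold, as a fraction of one LITTLE core's capacity,
# above which a task is placed on the big (high-performance) cluster.
UP_MIGRATION_THRESHOLD = 0.8


@dataclass
class Task:
    name: str
    load: float  # hypothetical demand, as a fraction of one LITTLE core


def place(tasks: List[Task], threshold: float = UP_MIGRATION_THRESHOLD) -> Dict[str, str]:
    """Map each task name to 'big' or 'LITTLE' by comparing its load to a threshold."""
    placement = {}
    for task in tasks:
        # Heavy work goes to the high-performance cluster;
        # light background work stays on the energy-efficient cluster.
        placement[task.name] = "big" if task.load > threshold else "LITTLE"
    return placement


if __name__ == "__main__":
    ivi_tasks = [
        Task("navigation_render", 1.4),
        Task("video_playback", 0.9),
        Task("radio_tuner", 0.1),
        Task("telematics_heartbeat", 0.05),
    ]
    for name, cluster in place(ivi_tasks).items():
        print(f"{name:22s} -> {cluster}")
```

Keeping light, always-on tasks such as telematics on LITTLE cores is what lets the big cores stay idle, and cool, until demanding work like map rendering arrives.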

Because IVI connects the user to the outside world, its communications must be secure, as must updates to apps and vehicle software. Security researchers Charlie Miller and Chris Valasek famously gained remote control of a Jeep by exploiting a security hole in the car's head unit, so security is paramount. Safety is also a concern, with many systems requiring compliance at ASIL B or higher (Automotive Safety Integrity Level, as defined in ISO 26262) because of their impact on driver safety.

Technology choice is also crucial: IVI applications include GPS navigation, radio, telematics and video playback, each with different computing requirements. Matching each task to the right processor generates less heat, which reduces the cooling needed on board, saving weight and cost and improving reliability.

ADAS distributes intelligence across the vehicle

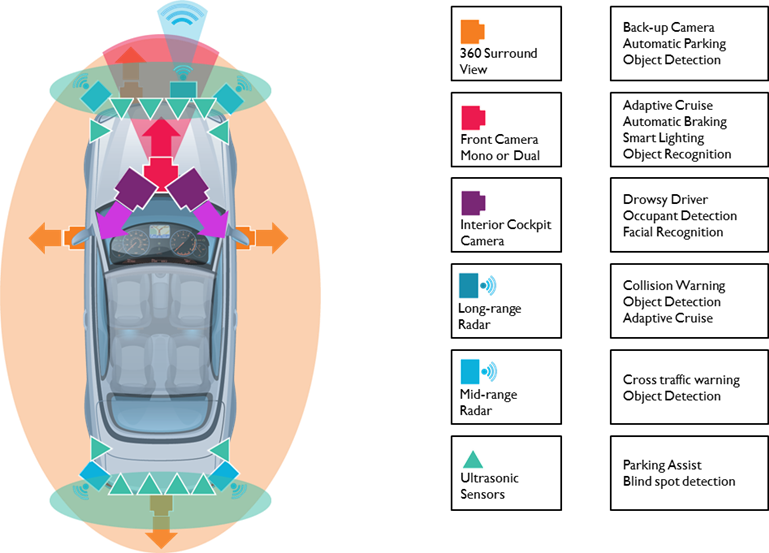

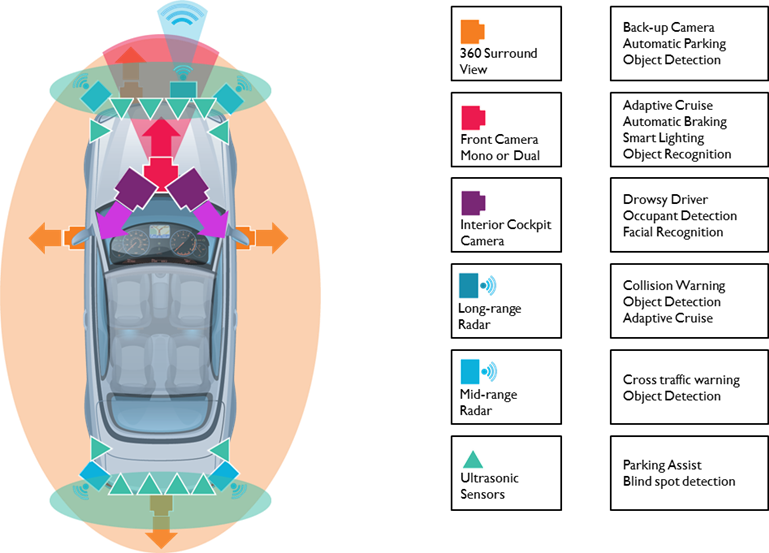

Analysts predict that the on-board computing power of a typical saloon will increase 100-fold between 2016 and 2025, powering ADAS and IVI functions. This spans a huge computing spectrum: ADAS combines information from the many sensors dotted around the car and feeds it into a large processing unit that interprets the data and makes decisions in real time. These sensors, all designed to improve driver safety, include radar, lidar, ultrasonic sensors and cameras.
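As one simple illustration of "making sense of the data", the sketch below fuses distance readings from several sensors with an inverse-variance weighted mean, so more precise sensors count for more. The sensor names and noise figures are hypothetical, and real ADAS stacks typically use Kalman filters and object tracking rather than this single-shot estimate.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Reading:
    sensor: str
    distance_m: float  # measured distance to the object ahead, in metres
    std_dev_m: float   # assumed 1-sigma measurement noise, in metres


def fuse(readings: List[Reading]) -> Tuple[float, float]:
    """Inverse-variance weighted fusion of independent distance readings.

    Returns (fused_distance_m, fused_std_dev_m). Assumes each sensor's error
    is independent, unbiased and Gaussian.
    """
    if not readings:
        raise ValueError("need at least one reading")
    # Weight each reading by 1 / sigma^2: lower noise means higher weight.
    weights = [1.0 / (r.std_dev_m ** 2) for r in readings]
    total = sum(weights)
    fused = sum(w * r.distance_m for w, r in zip(weights, readings)) / total
    # The fused estimate is never noisier than the best single sensor.
    fused_std = (1.0 / total) ** 0.5
    return fused, fused_std


if __name__ == "__main__":
    readings = [
        Reading("radar", 25.4, 0.5),
        Reading("lidar", 25.0, 0.1),
        Reading("camera", 26.0, 1.5),
    ]
    distance, sigma = fuse(readings)
    print(f"fused distance: {distance:.2f} m (+/- {sigma:.2f} m)")
```

With these hypothetical numbers, the result sits close to the lidar reading because lidar is assumed to be the most precise sensor.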

Safe operation is vital here, as this is where we as drivers relinquish responsibility and hand it to the car itself. That's why all ADAS components must be designed to the highest safety standards, many up to ASIL D, the most stringent level.

The range of functions means that a broad ecosystem is required to deliver specialized computing in each area, giving OEMs the best choice for each vehicle, whether a small city car, a premium saloon or an SUV. Components distributed around the body of the car must be small and highly integrated, yet it is still critical that they are secure against both external and internal interference. If you depend on your car's sensors to tell you that the lane beside you is clear for overtaking, you want to be sure they cannot be hacked.
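One common way to keep a sensor message like "lane clear" from being forged or replayed is to attach a message authentication code and a freshness counter. The sketch below does this with HMAC-SHA256 from Python's standard library. The frame layout, `TAG_LEN` and key handling are hypothetical; the approach is similar in spirit to AUTOSAR SecOC but does not reproduce its actual format.

```python
import hashlib
import hmac
import struct
from typing import Optional, Tuple

# Hypothetical frame layout: sensor id (uint16), counter (uint32),
# lane-clear flag (uint8), followed by a truncated HMAC-SHA256 tag.
PAYLOAD_FMT = ">HIB"
TAG_LEN = 8  # truncated tag length in bytes (assumption)


def _tag(key: bytes, payload: bytes) -> bytes:
    return hmac.new(key, payload, hashlib.sha256).digest()[:TAG_LEN]


def pack_frame(key: bytes, sensor_id: int, counter: int, lane_clear: bool) -> bytes:
    payload = struct.pack(PAYLOAD_FMT, sensor_id, counter, int(lane_clear))
    return payload + _tag(key, payload)


def verify_frame(key: bytes, frame: bytes, last_counter: int) -> Optional[Tuple[int, bool]]:
    """Return (counter, lane_clear) if the frame is authentic and fresh, else None."""
    payload_len = struct.calcsize(PAYLOAD_FMT)
    if len(frame) != payload_len + TAG_LEN:
        return None
    payload, tag = frame[:payload_len], frame[payload_len:]
    # Constant-time comparison avoids leaking how many tag bytes matched.
    if not hmac.compare_digest(tag, _tag(key, payload)):
        return None
    _sensor_id, counter, lane_clear = struct.unpack(PAYLOAD_FMT, payload)
    # Requiring a strictly increasing counter rejects replayed frames.
    if counter <= last_counter:
        return None
    return counter, bool(lane_clear)


if __name__ == "__main__":
    key = bytes(32)  # placeholder; a real key would be provisioned securely per ECU
    frame = pack_frame(key, sensor_id=7, counter=42, lane_clear=True)
    print(verify_frame(key, frame, last_counter=41))
```

A tampered flag, a wrong key or a replayed counter all cause `verify_frame` to reject the frame, so the receiving controller never acts on it.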

As with IVI, ADAS requires specialized processing for each task because of the cost, power and thermal constraints of managing so many processors. With ARM's broad portfolio of low-power technology and a comprehensive ecosystem built on a standard architecture, OEMs can choose the degree of specialization they need while keeping integration straightforward, whatever the vehicle or specification.

Safety and security are the responsibility of everyone

Safety is critical because of the damage or injury that can result when something goes wrong. Security, seemingly always in the news these days, is equally top of mind, not only for the same reason but also because vehicles have a lifespan of 10 to 15 years. It's vital to know that vehicles are secure now and can be kept secure as new attacks emerge.

No single supplier can ensure a vehicle's safety and security. This requires an ecosystem of companies working together, from hardware to software, and from operating system vendor to manufacturer. By working with a standard architecture, manufacturers can reduce cost, increase scalability and shorten time to market.

ARM offers comprehensive security solutions throughout the vehicle, building on the sensor-to-server security technology already deployed in billions of mobile, networking and embedded applications. With an automotive dream team of safety and security solutions (ISO 26262 support and TrustZone technology), ARM provides a trusted foundation for OEMs and users of the connected car.

ARM technology provides a foundation for the future of automotive

The ARM ecosystem, constantly collaborating on technologies and solutions, is committed to building safe and secure chips that scale to deliver the right performance for each function. The journey on the map may appear long, but from tiny radar sensors to powerful IVI and ADAS controllers, the future of driving technology is arriving faster than you think.